In today's fast-paced business world, data is everywhere. It's the fuel that drives decisions, powers new technologies, and helps you understand your customers. But just having a lot of data isn't enough. Imagine trying to build something amazing with faulty materials – the results wouldn't be good. The same goes for data. This blog post will explore "data quality" – what it means, why it's incredibly important for your business's success, and how you can ensure your data is always working for you, not against you.

What is Data Quality?

Data quality is about how well data meets certain standards like accuracy, completeness, consistency, timeliness, and relevance. In the context of data analytics, it helps determine whether the data is good enough to be used for analysis, reporting, or decision-making.

Data issues such as missing values, duplicates, or outdated information can lead to wrong conclusions and poor business decisions. Fixing these issues is important for keeping operations smooth and reducing risks.

As more companies use automation and artificial intelligence, the need for high-quality data becomes even more important. If the data going into a system is poor, the output will also be unreliable.

To manage this, businesses use tools and processes that check for errors, clean up records, and track the quality of data over time. These steps help make sure the data stays useful and trustworthy.

In simple terms, good data quality allows teams to work faster, avoid mistakes, and build strategies based on facts instead of assumptions.

Data Quality vs. Data Integrity

While data quality and data integrity are closely related, they refer to different aspects of data management. Understanding the difference is important for building a strong data foundation.

- Data quality refers to how accurate, complete, consistent, and up-to-date the data is. It answers questions like: Is the data correct? Is anything missing? Can it be trusted for reporting and decision-making?

- Data integrity focuses on the reliability and security of data throughout its lifecycle. It ensures data remains unchanged, properly linked, and protected from unauthorized access or corruption.

Key Difference:

- Data quality is about how good the data is.

- Data integrity is about how secure and stable the data is over time.

Both are essential. High data quality allows teams to use the data effectively, while strong data integrity ensures that the data remains safe and dependable throughout its use.

Why Is Data Quality Important?

Data quality matters because every business decision, report, or automated process depends on having reliable information. When data is accurate and well-managed, it becomes a strong foundation for daily operations, strategy, and long-term growth.

Poor-quality data can lead to costly mistakes. For example, if a business runs a marketing campaign with incorrect customer information, it wastes time and money and may lose customer trust. Inaccurate financial data can result in reporting errors or compliance issues. Even small problems, like missing values or duplicate records, can lead to confusion or delays.

Good data quality allows teams to make decisions with confidence. It supports better forecasting, helps improve customer experiences, and makes systems like automation and AI work more effectively. In short, clean data reduces risk and increases efficiency.

As businesses collect more data from different sources, maintaining quality becomes even more important. Without proper checks, data problems can quickly grow and impact performance across departments.

Strong data quality is not just helpful; it is essential for using data in a way that brings real value.

9 Popular Data Quality Dimensions and Characteristics

To evaluate how good the data is, businesses rely on specific characteristics, also called dimensions of data quality. These help assess whether the data is suitable for its intended use. Below are nine widely accepted dimensions used to measure and maintain data quality:

- Accuracy

Accuracy means the data reflects the real-world values it represents. For example, a customer’s phone number or address should be correct and up to date.

- Completeness

Completeness checks whether all required information is present. Missing data in important fields, like product details or transaction dates, lowers completeness.

- Consistency

Data should remain the same across systems and records. For example, a customer’s status should not be listed as “active” in one system and “inactive” in another.

- Timeliness

Timeliness measures how current the data is. Outdated data can lead to poor decisions, especially in time-sensitive situations like inventory or delivery tracking.

- Validity

Validity checks whether the data follows the expected format and falls within allowed values. A date field should only contain real dates, and a percentage field that represents a share of a whole should stay between 0 and 100.

- Uniqueness

Uniqueness ensures there are no duplicate entries. For example, a single customer should not have multiple records with different IDs.

- Relevance

Data should be useful for the task at hand. Even accurate and complete data may be unhelpful if it doesn't apply to the business question being asked.

- Integrity

Integrity focuses on how well data is connected. For example, if a sales record links to a customer ID, that ID must exist and be correct in the customer table.

- Accessibility

Data should be easy to find and use by those who need it, while still following security rules. If it’s hard to access, even high-quality data loses value.

These nine dimensions help organizations measure how trustworthy and useful their data is. Focusing on them ensures the data supports accurate, consistent, and reliable outcomes across all business functions.
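
Several of these dimensions can be turned into simple numbers. The sketch below is one minimal way to do that in plain Python, not a standard implementation. The sample records, the field names (`email`, `signup`, `discount_pct`, `customer_id`), and the helper functions are all hypothetical and exist only for illustration. It scores completeness, validity, and uniqueness as a share of rows, and lists sales records whose customer ID has no match, which is the integrity example described above.

```python
from datetime import date

# Hypothetical sample records; the field names are illustrative only.
customers = [
    {"id": 1, "email": "ana@example.com", "signup": "2024-01-15", "discount_pct": 10},
    {"id": 2, "email": None, "signup": "2024-02-30", "discount_pct": 120},
    {"id": 2, "email": "bo@example.com", "signup": "2023-11-02", "discount_pct": 0},
    {"id": 4, "email": "cy@example.com", "signup": "2024-03-09", "discount_pct": 25},
]
orders = [
    {"order_id": 100, "customer_id": 1},
    {"order_id": 101, "customer_id": 3},  # points at a customer that does not exist
]


def is_valid_date(text):
    """Return True if text is a real calendar date in YYYY-MM-DD form."""
    try:
        date.fromisoformat(text)
        return True
    except (TypeError, ValueError):
        return False


def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    return sum(1 for r in rows if r.get(field) not in (None, "")) / len(rows)


def validity(rows):
    """Share of rows with a real signup date and a discount between 0 and 100."""
    ok = sum(
        1 for r in rows
        if is_valid_date(r["signup"]) and 0 <= r["discount_pct"] <= 100
    )
    return ok / len(rows)


def uniqueness(rows, key):
    """Share of distinct key values among all rows (1.0 means no duplicates)."""
    return len({r[key] for r in rows}) / len(rows)


def orphaned(child_rows, fk, parent_rows, pk):
    """Child rows whose foreign key has no matching parent row."""
    parent_keys = {p[pk] for p in parent_rows}
    return [c for c in child_rows if c[fk] not in parent_keys]


report = {
    "completeness_email": completeness(customers, "email"),
    "validity": validity(customers),
    "uniqueness_id": uniqueness(customers, "id"),
    "orphaned_orders": [
        o["order_id"] for o in orphaned(orders, "customer_id", customers, "id")
    ],
}
print(report)
```

Scores like these are only as meaningful as the rules behind them, so the thresholds that count as "good enough" should come from the business use case rather than from the code.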

Benefits of Good Data Quality

Good data quality brings real value to businesses by making sure that the information they rely on is accurate, complete, and dependable. Here are the key benefits:

- Better Decision-Making

High-quality data leads to insights that are more accurate. This helps leaders make decisions based on facts, not guesswork.

- Improved Operational Efficiency

Clean and reliable data reduces the need for rework, manual corrections, and unnecessary back-and-forth. It saves time and resources.

- Increased Customer Trust

When customer data is accurate and up to date, companies can deliver personalized and timely communication. This builds trust and improves the customer experience.

- Stronger Compliance

Many industries require data accuracy for legal and regulatory reasons. Good data quality helps organizations stay compliant and avoid fines or penalties.

- Effective Use of AI and Automation

AI and automation tools rely on quality input. When the data is clean, these tools perform better and produce more reliable outcomes.

- Lower Costs

Poor data can lead to errors that are expensive to fix later. High-quality data helps avoid these costs by preventing issues early.

- Faster Reporting and Analysis

With fewer errors or gaps to fix, teams can generate reports and insights more quickly. This speeds up decision-making and execution. Data visualization can further simplify complex data for quicker insights.

- Scalability

As businesses grow, managing large volumes of data becomes easier when the foundation is already strong. Good data quality supports long-term growth.

Investing in data quality is not just a technical task; it is a business priority. It enables companies to move with confidence, reduce risks, and unlock the full value of their data. Whether you're building reports, launching products, or adopting AI, everything works better when your data is trustworthy.

Top Data Quality Challenges

Maintaining high data quality is not always easy. As data moves through systems, teams, and tools, several challenges can arise. Here are the most common data quality problems organizations face:

- Incomplete Data

Missing fields in customer forms, product details, or transaction records are common. Incomplete data reduces the value of datasets and often blocks key processes.

- Duplicate Records

The same customer, supplier, or entry appearing more than once can lead to confusion, inflated numbers, or duplicated efforts across teams.

- Inaccurate Information

Errors can come from manual entry, outdated sources, or integration issues. Even small inaccuracies can lead to wrong insights or failed communications.

- Inconsistent Formats

When data is collected from multiple systems, formats can vary. Dates, currencies, or product codes might not match, making analysis difficult.

- Outdated Data

Over time, data becomes less reliable. For example, customer contact details or inventory status may no longer be valid, which can affect decision-making.

- Lack of Standardization

Without clear rules or naming conventions, data entered by different teams may be messy or hard to use. This makes reporting and automation harder to manage.

- Disconnected Systems

Data stored in isolated tools or platforms often leads to fragmented information. Without integration, it’s hard to see a full picture or ensure consistency.

- Human Errors

Manual data entry is still common in many businesses. It often leads to mistakes that go unnoticed at first and take time to fix once they are found.

- No Clear Ownership

When it’s unclear who is responsible for maintaining data quality, issues tend to pile up. Without accountability, data quality efforts often lose momentum.

Addressing these challenges requires a mix of the right tools, clear processes, and a culture where data quality is treated as everyone’s responsibility. Fixing these issues early helps prevent larger problems down the line and supports better decision-making across the business.
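
Two of these challenges often hide each other: inconsistent formats can make duplicate records look like different records. The sketch below shows one possible way to surface that, assuming rows merged from two hypothetical systems (`crm` and `billing`) and an assumed list of two date formats. Treating email addresses as case-insensitive is also an assumption made for the example.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical rows merged from two systems with different conventions.
rows = [
    {"source": "crm", "email": "Ana@Example.com ", "joined": "2024-01-15"},
    {"source": "billing", "email": "ana@example.com", "joined": "15/01/2024"},
    {"source": "crm", "email": "bo@example.com", "joined": "2023-11-02"},
]

# Assumed formats for this sketch; a real feed needs its own agreed list.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Try each known format; return a date, or None if none match."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


def normalize_email(text):
    """Trim whitespace and lower-case so equal addresses compare equal."""
    return text.strip().lower()


def find_duplicates(records):
    """Group records by normalized email; keep groups with more than one row."""
    groups = defaultdict(list)
    for r in records:
        groups[normalize_email(r["email"])].append(r)
    return {k: v for k, v in groups.items() if len(v) > 1}


duplicates = find_duplicates(rows)
for email, group in duplicates.items():
    dates = {parse_date(r["joined"]) for r in group}
    print(email, [r["source"] for r in group], "same join date:", len(dates) == 1)
```

Without the normalization step, a plain equality check would treat the first two rows as different customers, which is how inflated counts tend to creep into reports.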

Common Techniques for Managing Data Quality

Maintaining high data quality requires a set of practical techniques that help identify and fix issues while keeping data reliable over time. Below are the most commonly used techniques in data quality management:

- Data Profiling

This involves scanning your data to understand its structure, patterns, and potential issues. It helps identify missing values, duplicate records, and outliers early.

- Data Cleansing

Also known as data cleaning, this is the process of fixing errors like typos, formatting issues, and incorrect values. It may also involve removing or correcting duplicate entries.

- Validation Rules

These are checks set up to ensure that new data being entered or imported follows specific rules. For example, a phone number must have a certain number of digits.

- Data Standardization

This technique brings consistency to how data is formatted. For example, ensuring that dates follow the same format across all records.

- Monitoring and Alerts

Ongoing monitoring tracks data quality in real time. If something goes wrong, like a spike in missing data, alerts are triggered for quick action.

- Root Cause Analysis

Instead of just fixing surface-level issues, this technique helps teams trace problems back to their source, so they can be addressed permanently.

- Data Governance Policies

These are guidelines and responsibilities assigned to team members to ensure everyone follows best practices for data quality across departments.
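
To make two of these techniques concrete, here is a small sketch that combines a validation rule with a basic monitoring alert. It is a simplified illustration, not a production design. The 10-digit phone rule, the 20% missing-value threshold, and the function names are assumptions chosen for the example.

```python
import re

# Assumed rule for this sketch: a phone number is exactly 10 digits
# once spaces, dashes, dots, and parentheses are removed.
PHONE_DIGITS = 10
# Assumed alert threshold: flag a batch when over 20% of phones are missing.
MISSING_ALERT_THRESHOLD = 0.20


def clean_phone(raw):
    """Strip common separators; return None for missing or empty input."""
    if raw is None:
        return None
    digits = re.sub(r"[\s\-().]", "", raw)
    return digits or None


def validate_phone(raw):
    """Return an error message, or None when the value passes the rule."""
    phone = clean_phone(raw)
    if phone is None:
        return "missing"
    if not phone.isdigit() or len(phone) != PHONE_DIGITS:
        return "invalid format"
    return None


def check_batch(batch):
    """Validate every row, then decide whether the missing rate needs an alert."""
    errors = {}
    for i, row in enumerate(batch):
        problem = validate_phone(row.get("phone"))
        if problem:
            errors[i] = problem
    missing = sum(1 for p in errors.values() if p == "missing")
    missing_rate = missing / len(batch) if batch else 0.0
    return errors, missing_rate, missing_rate > MISSING_ALERT_THRESHOLD


batch = [
    {"phone": "(555) 010-1234"},
    {"phone": "555-0101"},
    {"phone": None},
    {"phone": "555.010.9876"},
]
errors, missing_rate, alert = check_batch(batch)
print(errors, missing_rate, alert)
```

In practice the alert would feed a notification channel or dashboard rather than a print statement, and the threshold would be tuned against what a normal batch looks like for that dataset.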

Top 10 Data Quality Tools

- Great Expectations

Great Expectations is an open-source Python-based tool for creating and running data validation checks. It supports data profiling, test automation, and generates human-readable documentation alongside your tests.

- Deequ

Deequ, developed by AWS, is an open-source library built on Apache Spark. It allows you to write "unit tests for data" at scale and is ideal for validating large tabular datasets.

- Monte Carlo

Monte Carlo is a no-code, AI-powered data observability platform. It uses machine learning to detect pipeline issues, alert users to anomalies, and trace root causes without the need for manual rule-setting.

- Anomalo

Anomalo uses machine learning to automatically detect data issues based on historical patterns. There is no need to define rules up front; you connect your data warehouse and start monitoring key tables.

- Lightup

Lightup allows fast deployment of prebuilt data quality checks across large data pipelines. It supports real-time anomaly detection and AI-based monitoring without writing code.

- Bigeye

Bigeye continuously monitors the health of data pipelines. It uses anomaly detection and root cause analysis to help teams spot and resolve data quality issues before they affect operations.

- Acceldata

Acceldata offers enterprise-grade observability and monitoring for complex data systems. It’s widely used in finance and telecom to track performance and detect issues across multiple layers.

- Datafold

Datafold is a data observability tool that helps teams detect changes in datasets through data diffs and profiling. It integrates with CI/CD pipelines for testing and tracking data quality over time.

- Collibra

Collibra is a no-code, AI-enabled data quality and governance platform. It automatically monitors and validates data, adapting its rules based on machine learning and alerting stakeholders in real time.

- dbt Core

dbt Core is an open-source tool for managing data transformations. It includes built-in data quality testing, allowing teams to validate data at each step of the pipeline during development and deployment.

The right data quality tool helps you catch issues early, build trust in your data, and improve decision-making. Investing in strong data quality practices lays the foundation for smarter, faster business growth.

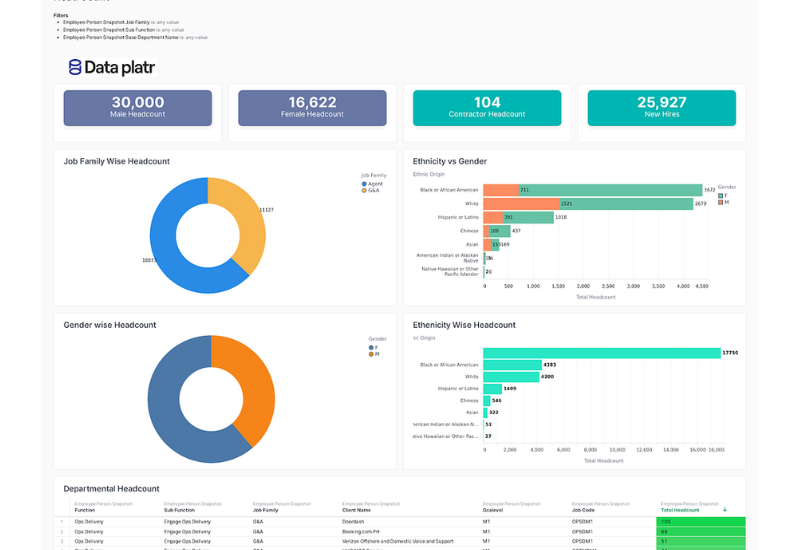

Data Quality with Dataplatr

At Dataplatr, we understand that good decisions start with good data. As a data analytics services provider, we help businesses identify, improve, and maintain the quality of their data across systems, teams, and workflows.

Our approach to data quality focuses on practical outcomes. We work closely with clients to assess where their data stands today, define clear quality goals, and implement the right tools and processes to get there. Whether it’s through automated validation checks, cleansing routines, data profiling, or building governance frameworks, we tailor each solution to the needs of the business.

We also make sure data quality isn’t treated as a one-time task. With growing data volumes and sources, our ongoing monitoring and root cause analysis methods help clients stay ahead of potential issues before they impact operations or decision-making.

From supporting cloud migrations to enabling AI readiness, Dataplatr ensures that your data works for you: clean, complete, and ready to use when it matters most.

Conclusion

Think of good data as the sturdy foundation of a strong building. Without it, everything you build on top – from daily decisions to big plans and smart technology – becomes shaky. Data quality is simply about making sure your information is clear, correct, and ready to use. It means fewer mistakes, faster work, happier customers, and the confidence to move your business forward. In a world overflowing with information, focusing on quality ensures your data is a true advantage, not a hidden problem.