What is Data Lakehouse Architecture?

Data lakehouse architecture is a modern way of storing and managing data that solves some of the biggest problems businesses face when working with large amounts of information.

In the past, companies used two different systems:

- A data warehouse to store clean, organized data for reports and charts. It was fast but expensive, and it worked best with structured data.

- A data lake to store all kinds of raw data, including files, videos, and logs. It was cheaper and more flexible, but it lacked the schema enforcement and transactional guarantees needed for reliable analysis.

The data lakehouse combines both systems in one.

It allows businesses to store all their data in one place, similar to a data lake, but with the ability to organize, clean, and analyze it easily, like a data warehouse.

With this setup, teams don’t need to copy data between systems. They can work with fresh data, run reports, build machine learning models, and make decisions faster.

In short, data lakehouse architecture gives businesses a simpler and more affordable way to handle all types of data while still getting accurate and reliable results.

The 5 Key Layers of Data Lakehouse Architecture

Data lakehouse architecture allows organizations to manage both structured and unstructured data within a single, scalable system. It supports diverse use cases such as business reporting, real-time analytics, and machine learning. This flexibility is made possible through five foundational layers that work together to collect, store, manage, and deliver data efficiently.

Here’s a detailed look at each layer:

1. Ingestion Layer

The ingestion layer is responsible for collecting data from various internal and external sources and bringing it into the lakehouse. These sources might include:

- Traditional databases (like MySQL, PostgreSQL)

- Cloud data services (like Salesforce or Google Analytics)

- Streaming platforms (like Apache Kafka or Amazon Kinesis)

- NoSQL systems (like MongoDB or Cassandra)

- APIs and flat files (CSV, JSON, XML)

Based on business requirements, data can be ingested either in real-time or through scheduled batch processes. This layer ensures all data, no matter its source or format, enters the system in a controlled, consistent manner, ready for storage and further processing.
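The two ingestion modes can be sketched with the standard library alone. This is a minimal illustration, not a production connector: the `envelope` wrapper, the function names, and the source labels (`orders_db`, `clickstream`) are hypothetical and stand in for whatever ingestion tool is actually used.

```python
import csv
import io
import json
from datetime import datetime, timezone
from typing import Dict, Iterable, Iterator, List


def envelope(source: str, payload: Dict) -> Dict:
    """Wrap a raw record with the source name and an ingestion timestamp."""
    return {
        "source": source,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "payload": payload,
    }


def ingest_csv_batch(source: str, text: str) -> List[Dict]:
    """Batch path: read a whole CSV extract in one scheduled run."""
    return [envelope(source, row) for row in csv.DictReader(io.StringIO(text))]


def ingest_json_stream(source: str, lines: Iterable[str]) -> Iterator[Dict]:
    """Streaming path: yield each JSON event as soon as it is read."""
    for line in lines:
        if line.strip():  # skip blank keep-alive lines
            yield envelope(source, json.loads(line))


# Hypothetical inputs: a nightly CSV export and a short burst of JSON events.
batch = ingest_csv_batch("orders_db", "order_id,amount\n1,9.99\n2,24.50\n")
stream = list(ingest_json_stream("clickstream", ['{"user": "u1", "page": "/home"}', ""]))
```

Both paths produce records of the same shape, which is one way to get the "controlled, consistent" entry point described above regardless of source or format.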

2. Storage Layer

Once the data arrives, it’s placed in a central storage location designed for scalability and cost-efficiency. Most modern lakehouse setups use cloud-based object storage systems like Amazon S3 or Azure Data Lake Storage.

The storage layer holds raw, semi-processed, and processed data in open formats such as Parquet (columnar, well suited to analytical scans) or Avro (row-oriented, common for ingestion and streaming). Because these formats are open, different tools and platforms can read the same files. Storage is decoupled from compute, so each can scale independently depending on workload needs.
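One common way to organize raw, semi-processed, and processed data in object storage is a zone prefix followed by date partitions. The sketch below only builds object keys; the zone names, the `dt=` partition convention, and the `object_key` helper are assumptions chosen for illustration, not a layout any particular platform requires.

```python
from datetime import date
from pathlib import PurePosixPath

# Hypothetical zone names mirroring the raw / semi-processed / processed split.
ZONES = ("raw", "semi_processed", "processed")


def object_key(zone: str, table: str, day: date, part: int, ext: str = "parquet") -> str:
    """Build a date-partitioned object key such as raw/orders/dt=2024-01-15/part-00000.parquet."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone}")
    path = PurePosixPath(zone) / table / f"dt={day.isoformat()}" / f"part-{part:05d}.{ext}"
    return str(path)


raw_key = object_key("raw", "orders", date(2024, 1, 15), 0)
processed_key = object_key("processed", "orders", date(2024, 1, 15), 3)
```

Keeping the partition value in the key lets a query engine skip whole prefixes when a query filters on date, without the storage system needing to know anything about the compute side.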

3. Metadata Layer

This layer provides clarity on the data’s structure, context, and lineage for both the system and its users. It tracks details such as:

- Table schema (column names, types, etc.)

- Data versions and changes over time

- Transformation steps and job logs

- Data quality metrics and historical issues

- Data lineage (where the data came from and how it has been changed)

Beyond simply describing data, the metadata layer adds intelligence to the system. It supports key features like schema validation, transaction handling (ACID), time travel (viewing past versions of data), and efficient indexing. This enhances the reliability of data operations and simplifies governance.
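To make schema validation, atomic commits, and time travel concrete, here is a toy in-memory commit log. It is a sketch of the idea only: the `TableLog` class is hypothetical, and real table formats persist their logs in storage and handle concurrent writers, which this does not.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TableLog:
    """Hypothetical in-memory stand-in for a lakehouse table's commit log."""
    schema: Dict[str, type]
    commits: List[List[dict]] = field(default_factory=list)

    def commit(self, rows: List[dict]) -> int:
        # Validate every row first, so a bad batch changes nothing (all-or-nothing).
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match the schema")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise TypeError(f"{column} must be {expected.__name__}")
        self.commits.append([dict(row) for row in rows])
        return len(self.commits)  # version number, starting at 1

    def read(self, version: Optional[int] = None) -> List[dict]:
        # Time travel: replay only the commits up to the requested version.
        upto = len(self.commits) if version is None else version
        return [row for batch in self.commits[:upto] for row in batch]


orders = TableLog(schema={"id": int, "amount": float})
v1 = orders.commit([{"id": 1, "amount": 9.99}])
v2 = orders.commit([{"id": 2, "amount": 24.50}])
latest = orders.read()             # both rows
as_of_v1 = orders.read(version=1)  # only the first commit
```

Because each commit is appended as a unit, readers see either the whole batch or none of it, and any earlier version can be rebuilt by replaying a prefix of the log.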

4. API and Access Layer

The API layer allows users and applications to communicate with the lakehouse. Whether it’s a BI tool, a machine learning pipeline, or a custom-built app, APIs allow these systems to read, write, and update data efficiently.

This layer is essential for integrating with tools like Power BI, Tableau, or custom dashboards. APIs also support streaming consumption, where systems can process data as it arrives. This is especially useful for real-time analytics, fraud detection, and monitoring systems that rely on up-to-date data.
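Streaming consumption can be pictured as a consumer that reacts to each event as it arrives instead of waiting for a full batch. The following is a minimal sketch under stated assumptions: the event fields (`id`, `amount`), the threshold rule, and the `flag_large_payments` function are hypothetical, and a real fraud check would be far more involved.

```python
from typing import Dict, Iterable, Iterator


def flag_large_payments(events: Iterable[Dict], threshold: float) -> Iterator[Dict]:
    """Yield an alert for each event above the threshold, one event at a time."""
    for event in events:
        if event["amount"] > threshold:
            yield {"alert": "large_payment", "event_id": event["id"], "amount": event["amount"]}


# Hypothetical feed; in practice this iterator would be backed by a streaming API.
incoming = iter([
    {"id": "e1", "amount": 40.0},
    {"id": "e2", "amount": 5200.0},
    {"id": "e3", "amount": 15.5},
])
alerts = list(flag_large_payments(incoming, threshold=1000.0))
```

Since the function is a generator, an alert is produced as soon as the matching event is read, which is the property real-time monitoring depends on.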

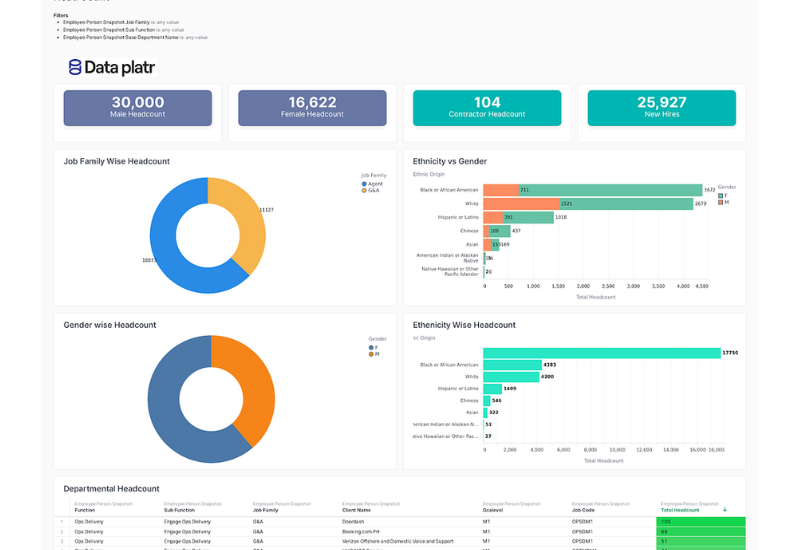

5. Data Consumption Layer

This is the layer where the data becomes truly useful. It enables teams, such as analysts, data scientists, and business users, to interact with the data using tools and applications they’re familiar with.

Through this layer, users can:

- Build and view dashboards

- Write SQL queries

- Create predictive models

- Visualize trends and metrics

- Pull insights for decision-making

The consumption layer supports a wide range of workloads and ensures that everyone in the organization, from technical users to non-technical stakeholders, can benefit from the same source of truth.
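As a small example of the SQL access mentioned above, the snippet below runs an aggregate query of the kind a dashboard might issue. It uses Python's built-in `sqlite3` purely as a stand-in for a lakehouse query engine; the `orders` table and its values are made up for illustration.

```python
import sqlite3

# In-memory database standing in for a lakehouse SQL endpoint (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EU", 120.0), ("EU", 80.0), ("US", 200.0)],
)

# Revenue per region, the kind of result a dashboard tile would display.
revenue_by_region = conn.execute(
    "SELECT region, SUM(amount) AS revenue FROM orders GROUP BY region ORDER BY region"
).fetchall()
conn.close()
```

An analyst writing this query and a BI tool generating it would both read from the same tables, which is what the "same source of truth" amounts to in practice.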

The five layers of data lakehouse architecture work together to create a unified platform that can handle the entire data lifecycle. From collecting raw data to delivering actionable insights, each layer plays a vital role in ensuring the system remains efficient, scalable, and accessible.

By integrating these layers into a single architecture, businesses can simplify their data infrastructure while still supporting advanced analytics and innovation.

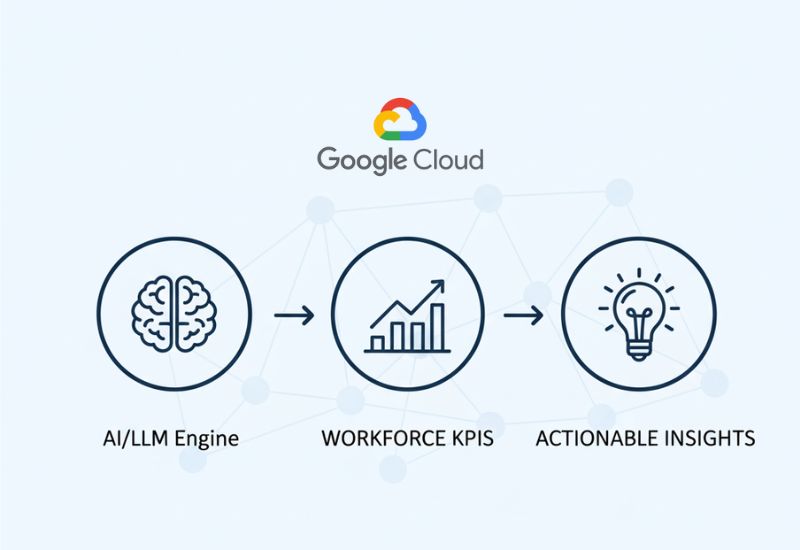

How Data Lakehouse Architecture Supercharges GenAI

Generative AI (GenAI) depends on large volumes of diverse, high-quality data. To use it effectively, organizations need a data foundation that supports scale, speed, and structure. This is where data lakehouse architecture fits.

A lakehouse architecture merges the flexibility of data lakes with the data management features of data warehouses, enabling seamless access to both structured and unstructured data. This hybrid approach makes it ideal for GenAI models that require a constant flow of clean, well-organized, and real-time data for training and inference.

With lakehouse platforms such as Databricks, or lakehouse implementations on Azure, businesses can streamline data pipelines, reduce latency, and unify data silos to create a single source of truth. This allows AI teams to build, test, and deploy GenAI applications faster.

In environments such as AWS or Azure Databricks, data engineers and data scientists can work together using open table formats like Delta Lake and Apache Hudi. These formats keep a versioned history of each table and enable time travel, which matters for reproducible GenAI model training and for auditability.
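One way to use table versions for auditability is to record, alongside each training run, exactly which snapshot the model saw. The sketch below is an assumption about how such a record might look: the `training_manifest` function, the model and table names, and the version number are hypothetical, and it does not call any real Delta Lake or Hudi API.

```python
import hashlib
import json
from typing import Dict, List


def snapshot_fingerprint(rows: List[Dict]) -> str:
    """Hash a canonical JSON encoding of the rows so the snapshot can be verified later."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def training_manifest(model: str, table: str, version: int, rows: List[Dict]) -> Dict:
    """Record which table version a training run used, plus a content fingerprint."""
    return {
        "model": model,
        "table": table,
        "table_version": version,
        "row_count": len(rows),
        "fingerprint": snapshot_fingerprint(rows),
    }


# Hypothetical snapshot read at a pinned table version.
snapshot = [
    {"ticket_id": 1, "text": "Cannot log in"},
    {"ticket_id": 2, "text": "Refund request"},
]
manifest = training_manifest("support-assistant", "support_tickets", 7, snapshot)
```

If the same version is read again later and the fingerprint matches, an auditor can be reasonably confident the model was trained on that exact data.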

By leveraging the data lakehouse architecture, organizations not only reduce data duplication and storage costs but also empower GenAI systems with real-time insights, context-rich information, and scalable computing power.

Whether you're exploring a lakehouse built on Oracle, Amazon Redshift, or Delta Lake, the principle remains the same: unifying your data layers allows you to unlock the full potential of GenAI.

Conclusion

As data continues to grow in volume, variety, and complexity, businesses need a smarter way to manage and use it. Data lakehouse architecture offers a powerful solution by combining the strengths of data lakes and data warehouses into a single, unified platform. It simplifies data infrastructure, reduces costs, and supports both traditional analytics and advanced AI workloads.

With its layered design, from ingestion to consumption, the lakehouse provides a strong foundation for real-time insights, machine learning, and scalable data applications. As technologies like GenAI evolve, the need for high-quality, well-governed, and accessible data will only increase.

By adopting data lakehouse architecture, organizations can future-proof their data strategy, improve decision-making, and unlock the full value of their data across platforms like AWS, Azure, and Databricks.