1. Introduction: The Unsung Hero of Financial Transformation

Finance digital transformation is often focused on the exciting outcomes: predictive forecasting, fraud detection, and conversational AI. However, the success of any advanced AI capability rests entirely on the quality of its input data. For subscription businesses, financial data is often fragmented, plagued by inconsistent formats, non-standard headers, and unexpected null values from disparate source systems like ERPs and legacy reporting tools.

This article focuses on the critical first step in building a modern financial platform: Data Ingestion and Cleansing. By establishing a self-healing data pipeline, an automated process that identifies, manages, and fixes data quality issues on its own, we can build the high-integrity foundation required for reliable, AI-driven financial insights.

Benefits of a High-Integrity Data Foundation

- A. Reliable AI Models: Machine learning models (for forecasting, CLV, etc.) perform poorly with 'dirty' data. Clean data is a precondition for accurate predictions.

- B. Reduced Audit Risk: A standardized, traceable, and error-free data set simplifies compliance and internal audits.

- C. Faster Time-to-Insight: Eliminating manual data preparation cuts down the time the finance team spends normalizing spreadsheets and correcting errors.

2. Deep Dive: Data Ingestion and Cleansing

The journey from raw data to analytical insight begins with a robust ETL (Extract, Transform, Load) process, typically handled on Google Cloud by services like Cloud Data Fusion and anchored by BigQuery. But simple migration is not enough; the 'T' (Transformation/Cleansing) phase must be intelligent and resilient.

This phase is designed to solve the "Key Problem" of structural complexity: fragile report formats that constantly break the ingestion process.

The AI's "Dirty Data" Problem

The success of any AI in finance depends on the quality of its data. For subscription businesses, financial data is often a fragmented mix of inconsistent formats, non-standard headers, and null values. This "dirty data" breaks AI models and slows down crucial insights.

Composition of Typical Legacy Data

A significant portion of incoming data is often unusable without manual intervention. This chart illustrates a common breakdown, where format errors, missing headers, and null values compromise data integrity and halt automated processes.

The Core Challenge: Header and Structural Complexity

Legacy reports are often optimized for human readability rather than machine ingestion. This results in fragile report formats where column headers are inconsistent, verbose, or span multiple lines.

The critical solution here is Header Normalization, which systematically enforces uniformity across all data sources.

Structural Challenge: Header Normalization

The cleansing process must apply consistent rules: trim excess spaces, convert headers to a uniform case (e.g., snake_case), and replace special characters with underscores (_).
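The three rules above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline's actual implementation; the function names `normalize_header` and `normalize_headers` and the sample headers are assumptions made for the example.

```python
import re


def normalize_header(raw: str) -> str:
    """Normalize one column header to snake_case (hypothetical helper)."""
    # Collapse line breaks and repeated spaces, which also trims the ends.
    text = " ".join(raw.split())
    # Replace every run of special characters with a single underscore.
    text = re.sub(r"[^0-9A-Za-z]+", "_", text)
    # Drop leading/trailing underscores and convert to lower case.
    return text.strip("_").lower()


def normalize_headers(headers):
    """Apply the same rule to every header in a report."""
    return [normalize_header(h) for h in headers]


# Sample headers are invented for illustration.
print(normalize_headers(["  Account ID/Lease # ", "Total\nAmount ($)", "Reporting Period"]))
```

Because every source passes through the same function, two reports that label a column `Account ID` and `account-id` end up with the same machine-readable name.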

Handling Data Inconsistency and Nulls

Even when the structure is fixed, individual data points often violate analytical standards, requiring automated repair for consistency and completeness.

Data Inconsistency & Non-Standardized Formats

Financial data arrives with a variety of non-standard formats that prevent immediate calculation or sorting:

- A. Date Standardization: Dates can be formatted as 05-02-2024, 5/2/2024, or May 2, 2024. The pipeline must convert all instances to a canonical ISO format (e.g., YYYY-MM-DD).

- B. Numeric Correction: Currency symbols ($, €), thousands separators (, or .), and text (e.g., [NoneType]) must be stripped or converted to ensure the field contains only a pure numeric value ready for mathematical aggregation.
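Both corrections can be sketched with the Python standard library. The list of accepted date formats, the month-first reading of ambiguous dates such as 05-02-2024, and the helper names `to_iso_date` and `to_decimal` are assumptions for this example; a real pipeline would configure them per source system.

```python
import re
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Assumed source formats. Ambiguous dates are read month-first here.
DATE_FORMATS = ("%m-%d-%Y", "%m/%d/%Y", "%B %d, %Y", "%Y-%m-%d")


def to_iso_date(raw):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def to_decimal(raw, decimal_sep="."):
    """Strip symbols and separators; return a Decimal, or None if nothing numeric is left."""
    if raw is None:
        return None
    # Keep only digits, separators, and the minus sign.
    text = re.sub(r"[^0-9.,\-]", "", str(raw))
    thousands_sep = "," if decimal_sep == "." else "."
    text = text.replace(thousands_sep, "")
    if decimal_sep != ".":
        text = text.replace(decimal_sep, ".")
    try:
        return Decimal(text)
    except InvalidOperation:
        return None


print(to_iso_date("May 2, 2024"), to_decimal("$1,234.50"), to_decimal("[NoneType]"))
```

Returning `None` for unparseable values, rather than raising, lets the pipeline flag the row for review instead of halting the whole batch.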

Fill Down - Forward Filling (FFILL) for Context

In many legacy financial extracts, key contextual identifiers (like a master Account ID/Lease number or Reporting Period) are only listed on the first line of a transaction group, leaving subsequent rows null. FFILL is a technique that addresses these null values by automatically propagating the last valid, non-null value forward to fill the blanks.

This ensures every transactional detail row is explicitly linked to its parent context, enabling accurate relational analysis and hierarchical reporting.
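As a rough sketch of the technique, the function below forward-fills selected columns over a list of row dictionaries. The column names and sample rows are invented for illustration, and treating empty strings as nulls is an assumption.

```python
def forward_fill(rows, columns):
    """Propagate the last non-null value of each listed column down to later rows."""
    last_seen = {}
    filled = []
    for row in rows:
        new_row = dict(row)  # copy so the input rows are left untouched
        for col in columns:
            value = new_row.get(col)
            if value is None or value == "":
                # Fill only when an earlier row supplied a value.
                if col in last_seen:
                    new_row[col] = last_seen[col]
            else:
                last_seen[col] = value
        filled.append(new_row)
    return filled


# Hypothetical extract: the lease number appears only on the first row of each group.
rows = [
    {"lease_no": "L-100", "amount": 10},
    {"lease_no": None, "amount": 20},
    {"lease_no": "", "amount": 5},
    {"lease_no": "L-200", "amount": 7},
]
print([r["lease_no"] for r in forward_fill(rows, ["lease_no"])])
```

In a pandas-based pipeline, `DataFrame.ffill()` provides the same behavior on a whole frame or on selected columns.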

Multi-line Records

Legacy systems often split what should be a single logical record across multiple physical lines in a file. This is common in complex journal entries or when fields contain unexpected line breaks.

The transformation pipeline must identify the logical start and end of a record, stitching together fragments and collapsing them into a single, clean row that adheres to the analytical schema. For example, consolidating data split by an unwanted carriage return (\r\n) into a single, comprehensive record before schema mapping is a key challenge in this stage.
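One way to sketch the stitching step is to treat any line that matches a known "record start" pattern as the beginning of a new record and every other line as a continuation of the previous one. The pipe-delimited layout, the `JE-` identifier prefix, and the function name `stitch_records` are assumptions for this example only.

```python
import re


def stitch_records(lines, start_pattern):
    """Join physical lines into logical records based on a start-of-record pattern."""
    start = re.compile(start_pattern)
    records = []
    for line in lines:
        fragment = line.strip()
        if not fragment:
            continue  # skip blank lines left behind by stray line breaks
        if start.match(fragment) or not records:
            records.append(fragment)  # a new logical record begins here
        else:
            # Continuation: append the fragment to the record in progress.
            records[-1] = f"{records[-1]} {fragment}"
    return records


# Hypothetical extract: the description of JE-1001 contains an unwanted \r\n.
raw = (
    "JE-1001|2024-05-02|Monthly rent\r\n"
    "for Suite 4|1200.00\r\n"
    "JE-1002|2024-05-03|Utilities|310.25\r\n"
)
for record in stitch_records(raw.splitlines(), r"^JE-\d+\|"):
    print(record)
```

Anchoring on the start of a record, rather than counting delimiters, keeps the logic working even when the line break falls inside the last field.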

Input:

Output:

Ensuring Traceability: Metadata Injection

Another exception that we see very frequently is metadata embedded in the source files themselves, such as the filters that were applied when the file was exported from the application.

By extracting and injecting this metadata, the pipeline achieves 100% Traceability, creating an audit trail that supports real-time anomaly detection and simplifies compliance checks, effectively transforming the data into a high-integrity asset.
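A minimal sketch of the idea, assuming the export begins with "Key: Value" lines followed by a blank line and then a CSV table. That layout, the `meta_` column prefix, and the file name are illustrative assumptions, not the format of any specific application.

```python
import csv
import io


def inject_metadata(text, source_name):
    """Lift leading 'Key: Value' lines out of an export and attach them to every row."""
    lines = text.splitlines()
    metadata = {"source_file": source_name}
    index = 0
    # Read metadata lines until the first blank line or a line without a colon.
    while index < len(lines) and lines[index].strip():
        key, sep, value = lines[index].partition(":")
        if not sep:
            break
        metadata["meta_" + key.strip().lower().replace(" ", "_")] = value.strip()
        index += 1
    # Skip the blank separator line(s) before the table.
    while index < len(lines) and not lines[index].strip():
        index += 1
    reader = csv.DictReader(io.StringIO("\n".join(lines[index:])))
    return [{**row, **metadata} for row in reader]


# Hypothetical export with two filter lines above the data.
EXPORT = """Report: Subscription Revenue
Period: 2024-05

account_id,amount
A-1,100
A-2,250
"""
for row in inject_metadata(EXPORT, "revenue_export.csv"):
    print(row)
```

Each output row now carries the file it came from and the filters under which it was exported, which is what makes the audit trail possible downstream.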

Input:

Output:

Curating Data for Hierarchical Intelligence

Beyond simple cleansing, this process is essential for unlocking Structural Intelligence.

The self-healing data pipeline automatically:

- A. Identifies Parent-Child Relationships: For subscription businesses, this might mean automatically linking line-item revenue records to their corresponding master subscription account or customer segment, ensuring immediate, accurate drill-down capability.

- B. Validates Data Hierarchies: It confirms that every child record correctly rolls up to a valid parent, eliminating reporting discrepancies caused by manual linking errors.
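The roll-up check can be sketched as a simple lookup: every child record must reference a parent that exists. The field name `subscription_id` and the sample records are hypothetical.

```python
def validate_hierarchy(children, parents, parent_key="subscription_id"):
    """Split child records into those that roll up to a known parent and orphans."""
    parent_ids = {parent[parent_key] for parent in parents}
    linked, orphans = [], []
    for child in children:
        if child.get(parent_key) in parent_ids:
            linked.append(child)
        else:
            orphans.append(child)
    return linked, orphans


# Hypothetical master subscriptions and revenue line items.
subscriptions = [{"subscription_id": "S-1"}, {"subscription_id": "S-2"}]
line_items = [
    {"line_id": 1, "subscription_id": "S-1", "revenue": 100},
    {"line_id": 2, "subscription_id": "S-9", "revenue": 40},
    {"line_id": 3, "subscription_id": None, "revenue": 15},
]
linked, orphans = validate_hierarchy(line_items, subscriptions)
print(len(linked), "linked;", [o["line_id"] for o in orphans], "orphaned")
```

Orphaned rows can then be routed to a review queue instead of silently distorting roll-up totals.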

Handling Exceptions and Self-Healing Automation

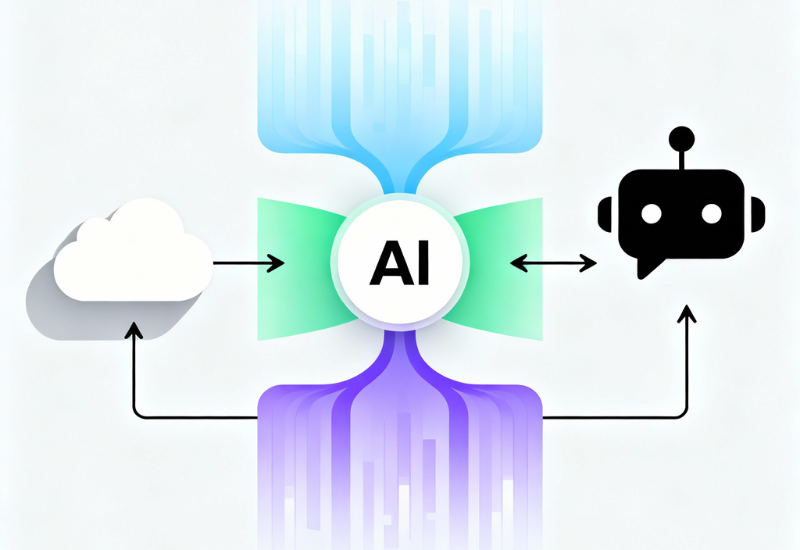

The most significant challenge is managing exceptions: the unexpected, non-standard data that bypasses the rules and causes pipeline failures. This is where the concept of a self-healing automation pipeline comes in, leveraging Generative AI (GenAI) to fix the code that cleans the data.

The Self-Healing Data Pipeline Process - Two-Tiered Approach:

A traditional pipeline breaks when it encounters an unhandled exception (e.g., a new currency symbol appears, a date format changes upstream, or the generated code contains a syntax error).

Tier 1: Data-Level Self-Healing (Retry Mechanism)

- A. Context: This initial self-healing occurs during the data quality check phase (e.g., anomaly detection and transformation).

- B. Trigger: The retry mechanism is activated when the generated code raises an error, such as a syntax error, or when data-specific issues arise, such as a new, unrecognised currency symbol or an unexpected date format change from an upstream source.

- C. Mechanism: The pipeline is designed to retry the processing step up to two times. During each retry, it dynamically recalls the specifics of the previous error.

- D. Outcome: By intelligently adjusting its approach based on past failures, the system attempts to resolve these data-level inconsistencies and "heal" itself, allowing the data to proceed successfully.
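The retry behavior described in this tier can be sketched as a small wrapper that re-runs a step up to two more times and hands it the previous error message. The names `run_with_retries` and `parse_amounts` are hypothetical, and the "adjustment" in the sample step is a deliberately simple stand-in for whatever the real pipeline does with the recalled error.

```python
def run_with_retries(step, batch, max_retries=2):
    """Run step(batch, previous_error); on failure, retry with the last error as context."""
    previous_error = None
    for _attempt in range(max_retries + 1):  # one initial attempt plus the retries
        try:
            return step(batch, previous_error)
        except Exception as exc:
            # Remember what went wrong so the next attempt can adapt.
            previous_error = f"{type(exc).__name__}: {exc}"
    raise RuntimeError(
        f"step failed after {max_retries} retries; last error: {previous_error}"
    )


def parse_amounts(batch, previous_error):
    """Hypothetical step: strict at first, more lenient once told about a failure."""
    cleaned = []
    for raw in batch:
        text = raw.replace("$", "").replace(",", "")
        if previous_error is not None:
            # After a failure, drop every character that cannot be part of a number.
            text = "".join(ch for ch in text if ch.isdigit() or ch in ".-")
        cleaned.append(float(text))
    return cleaned


# The pound sign is not handled on the first attempt, so one retry is needed.
print(run_with_retries(parse_amounts, ["$1,200.50", "£300"]))
```

If every attempt fails, the wrapper raises with the last error attached, which is the point at which the second tier would take over.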

Tier 2: Code-Level Self-Healing Data Pipeline (GenAI Repair)

- A. Context: GenAI Repair is initiated when the generated code runs successfully on sample data but fails on the full original data set. This more advanced self-healing mechanism is engaged when the pipeline encounters unexpected or unhandled exceptions that cause an outright failure, beyond what simple retries can fix.

- B. Error Detection: The data pipeline runs and fails due to an unhandled exception (e.g., IndexError: list index out of range; RecursionError: maximum recursion depth exceeded; MemoryError: Unable to allocate ... bytes; OverflowError: Python int too large to convert to C long).

- C. Failure Analysis: The pipeline is instrumented to capture the exact error message, the surrounding code snippet, and the problematic piece of raw data causing the failure.

- D. GenAI Repair Prompt: This comprehensive failure information is securely fed to a Generative AI model (like a specialized version of Gemini) with a specific system instruction: "You are a Python code repair engine. Based on the error and the input data, write the minimal code change necessary to resolve the exception and return the correct, runnable function."

- E. Code Repair: The GenAI model analyzes the failure and intelligently writes the Repair Function: the corrected code logic that can now handle the new, unexpected exception that caused the original pipeline failure.

- F. Re-Deployment & Reprocessing: The newly repaired code is automatically deployed back into the pipeline, and the failing data batch is reprocessed using the corrected logic.

- G. Outcome: The pipeline successfully runs with the reprocessed data, achieving "Post Self Heal: Code ran successfully." This signifies that the system has autonomously diagnosed and resolved a complex, structural code issue, ensuring continuous operational flow.
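The repair loop above can be sketched end to end with the model call abstracted behind a plain callable. Everything here is illustrative: `self_heal`, `build_repair_prompt`, and `stub_repair_model` are hypothetical names, the stub returns a canned fix instead of calling a real model, and only the system instruction is taken from the text above. Generated code should only ever be executed in a sandboxed environment.

```python
SYSTEM_INSTRUCTION = (
    "You are a Python code repair engine. Based on the error and the input data, "
    "write the minimal code change necessary to resolve the exception and return "
    "the correct, runnable function."
)


def build_repair_prompt(error, source_code, bad_record):
    """Bundle the error message, the code, and the failing raw data into one prompt."""
    return (
        f"{SYSTEM_INSTRUCTION}\n\nError:\n{error}\n\n"
        f"Code:\n{source_code}\n\nFailing input:\n{bad_record!r}\n"
    )


def load_function(source_code, func_name):
    """Compile source text and return the named function (sandbox this in practice)."""
    namespace = {}
    exec(source_code, namespace)
    return namespace[func_name]


def self_heal(source_code, func_name, batch, repair_model):
    """Run the batch; on an unhandled exception, ask the model for a fix and reprocess."""
    func = load_function(source_code, func_name)
    results = []
    for record in batch:
        try:
            results.append(func(record))
        except Exception as exc:
            error = f"{type(exc).__name__}: {exc}"
            repaired_code = repair_model(build_repair_prompt(error, source_code, record))
            repaired = load_function(repaired_code, func_name)
            # Reprocess the whole failing batch with the repaired logic.
            return [repaired(r) for r in batch], repaired_code
    return results, source_code


# Hypothetical transformation that fails on an empty line with an IndexError.
ORIGINAL_CODE = "def first_token(line):\n    return line.split()[0]\n"
REPAIRED_CODE = (
    "def first_token(line):\n"
    "    parts = line.split()\n"
    "    return parts[0] if parts else None\n"
)
received_prompts = []


def stub_repair_model(prompt):
    """Stand-in for a real GenAI call; records the prompt and returns a canned fix."""
    received_prompts.append(prompt)
    return REPAIRED_CODE


healed, final_code = self_heal(ORIGINAL_CODE, "first_token", ["INV 100", "", "CR 5"], stub_repair_model)
print(healed)
```

Keeping the model behind a callable makes it easy to swap in a real client later and to test the surrounding orchestration without any network access.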

3. Benefits of This Resilient Process

4. Summary of the End-to-End Implementation

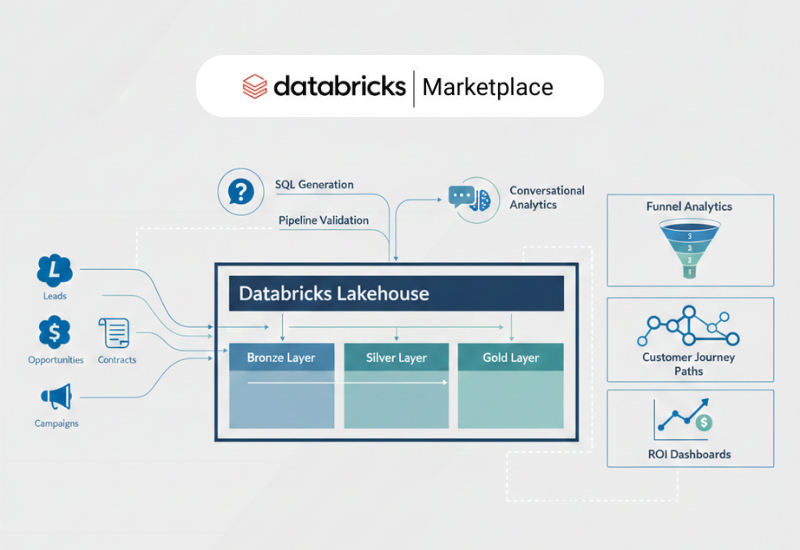

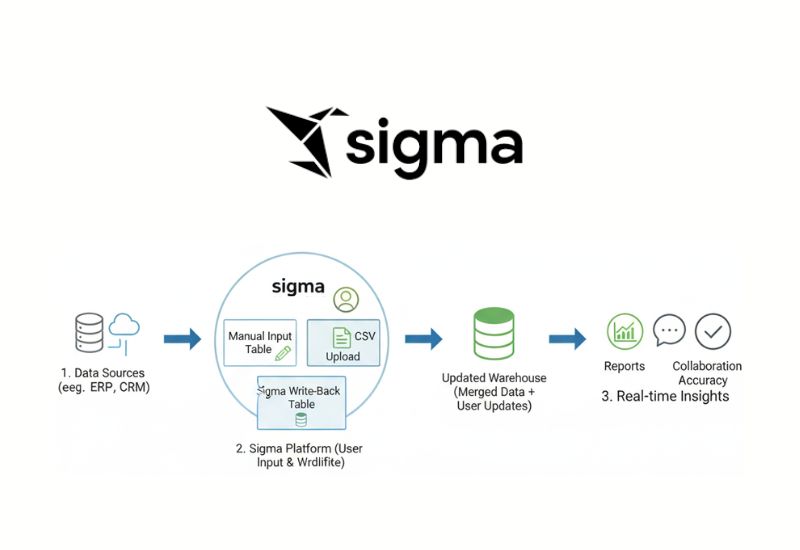

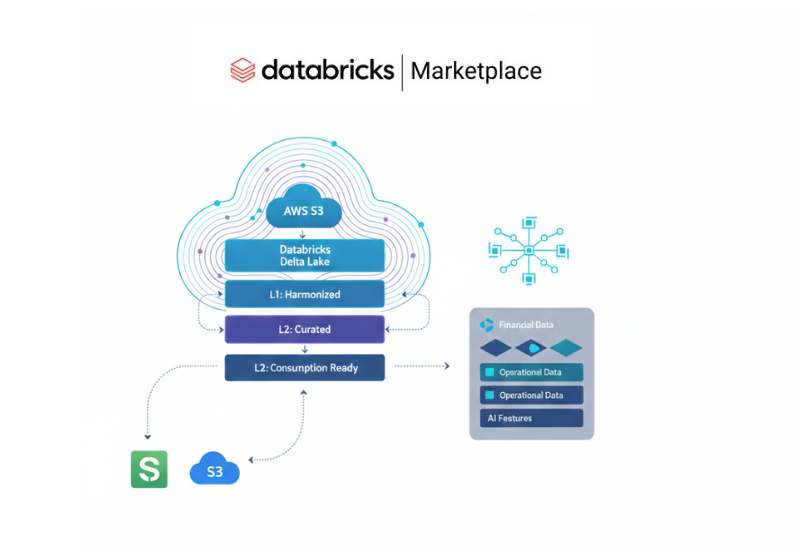

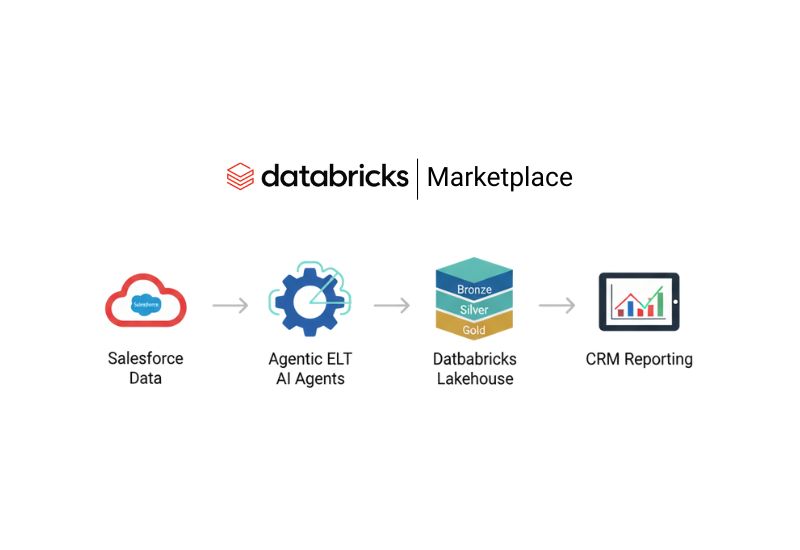

The Agentic AI Pipeline begins by securely ingesting critical financial data from disparate sources, such as Oracle, into BigQuery. As the data arrives, it is immediately subjected to a rigorous quality check: the pipeline first applies anomaly detection and transformation to correct minor issues and formatting inconsistencies. The system's true resilience emerges when an unexpected exception or fault causes the standard process to fail. At that point, an integrated self-healing mechanism automatically intervenes to diagnose and resolve complex or stubborn structural issues. This autonomous feature corrects critical data gaps without manual intervention, making the pipeline not just robust and secure, but capable of actively managing its own health to maintain a continuous operational flow.

The clean, unified data is then passed to Vertex AI for training predictive models (like cash flow and CLV forecasting) and is visualized in Looker. Crucially, the cleansed data, now standardized and validated, becomes the single source of truth that powers the downstream analytical tools and the GenAI conversational interfaces, moving the organization from fragile data preparation to robust, strategic execution.

Curious about the process? Get more details at https://dataplatr.com/blog/data-pipeline-automation

Your Data Is Now a Strategic Asset

By investing in a self-healing data pipeline, you eliminate "dirty data" as a bottleneck. You transform finance from a reactive reporting function into a proactive, strategic partner, all powered by data you can finally trust.
